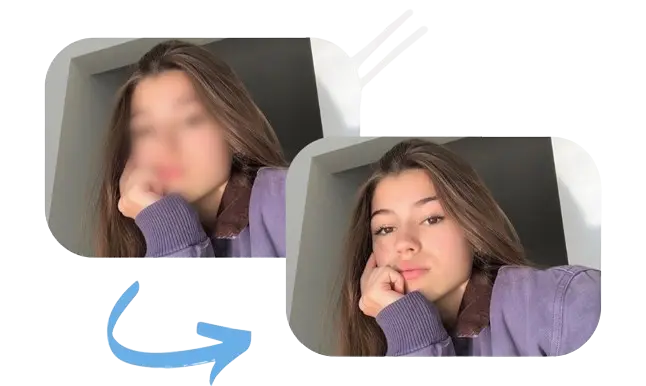

In today’s world of artificial intelligence and powerful image editing tools, it’s no surprise that apps are emerging that claim to do the impossible — such as “uncensoring photos” or “removing blurred areas” to reveal hidden content. At first glance, tools like AI Photo Uncensoring, Reveal Hidden Parts Pro, and others sound like futuristic tech you’d see in spy movies.

However, after hours of research, testing, and reviewing cybersecurity reports, one thing became crystal clear: these tools are mostly fake, often unethical, and in some cases, downright dangerous.

In this article, I’ll break down:

- What uncensored photo remover tools claim to do

- How they supposedly work vs. what actually happens

- Real-world case studies

- Cybersecurity threats

- Legal and moral consequences

- Safer, ethical alternatives for legitimate image enhancement

Whether you’re a tech enthusiast, a blogger, or just someone who stumbled across these tools out of curiosity, read this before you try anything.

What Are Uncensored Photo Remover Tools?

At their core, uncensored photo remover tools are software programs or mobile apps that claim to “restore” censored or redacted parts of an image. This includes:

- Blurred-out body parts

- Pixelated or low-res areas

- Covered sections with black bars or emoji stickers

- Any image that’s been intentionally obscured

These tools are mostly marketed toward NSFW curiosity and often target adult images. Their flashy ads promise results like:

“Reveal hidden areas of any image using AI”

“Remove black bars in one click”

“See what’s behind the blur with AI photo uncensoring”

Let’s be honest — the wording is designed to attract attention, not deliver truth.

How These Tools Claim to Work

Here’s where things get technical. Most apps or tools promoting censorship removal throw around impressive-sounding terms like:

1. AI Inpainting

AI inpainting refers to algorithms that fill in missing parts of an image using surrounding data. This is commonly used in old photo restoration and object removal tasks.

2. GANs (Generative Adversarial Networks)

GANs are a type of AI where two models (a generator and a discriminator) compete to create increasingly realistic results. These are used in deepfake technology and image generation.

3. Super-Resolution Algorithms

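These are used to upscale images and enhance details. Some apps claim they can “restore” censored parts using this method. They can’t: super-resolution estimates plausible detail from the pixels that remain, and a censored region has no original pixels left to work from.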

4. Pre-Trained NSFW Models

Some tools claim to be trained on massive datasets of adult content to “rebuild” body parts — but this simply means they’re generating fake anatomy, not revealing real images.

In short, these tools don’t recover anything. They generate a best guess based on other data. That’s not reality — it’s fiction created by code.
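To make the “best guess” point concrete, here is a minimal sketch of the inpainting idea, assuming a deliberately naive fill (the mean of the pixels bordering the masked area) on a tiny grayscale grid. The helper names `naive_inpaint` and `make_image` are hypothetical, and real inpainting models are far more sophisticated, but they share the same limitation: they only ever read what surrounds the hole.

```python
from statistics import mean


def naive_inpaint(image, top, left, height, width):
    """Fill a masked rectangle with the mean of the pixels bordering it.

    `image` is a list of rows of grayscale ints. Pixels inside the
    rectangle are never read; only their neighbours are.
    """
    rows, cols = len(image), len(image[0])
    border = []
    for r in range(top - 1, top + height + 1):
        for c in range(left - 1, left + width + 1):
            inside = top <= r < top + height and left <= c < left + width
            if not inside and 0 <= r < rows and 0 <= c < cols:
                border.append(image[r][c])
    fill = round(mean(border))
    result = [row[:] for row in image]
    for r in range(top, top + height):
        for c in range(left, left + width):
            result[r][c] = fill
    return result


def make_image(secret):
    """Build a 4x4 image whose central 2x2 block holds `secret`; the rest is 100."""
    img = [[100] * 4 for _ in range(4)]
    for r in (1, 2):
        for c in (1, 2):
            img[r][c] = secret
    return img


# Two photos that differ only in the region that later gets censored.
a = naive_inpaint(make_image(0), 1, 1, 2, 2)
b = naive_inpaint(make_image(255), 1, 1, 2, 2)
print(a == b)  # True: the fill is identical whatever was hidden
```

Both outputs match because the fill is computed from the surroundings alone. Whatever was under the mask has no influence on the result.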

The Reality: AI Can’t Reveal What Isn’t There

Let’s be clear: once an image is censored, the original visual information is gone.

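If someone pixelated a face or blacked out a body part, that data has been destroyed. A black bar overwrites the pixels outright, and pixelation or heavy blur averages many pixels into a few values, so countless different originals produce the same censored result. AI cannot pick out the true one. It’s like trying to unscramble eggs: it just doesn’t work.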
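A small sketch shows why, assuming pixelation is implemented as block averaging and a black bar as overwriting with zeros (both are simplifications, and the function names `pixelate` and `black_bar` are my own). Three different “originals” collapse into the same censored output, so nothing in that output says which one you started from.

```python
def pixelate(image, block):
    """Replace each block x block tile with its (integer) average value."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, rows, block):
        for left in range(0, cols, block):
            cells = [(r, c)
                     for r in range(top, min(top + block, rows))
                     for c in range(left, min(left + block, cols))]
            avg = sum(image[r][c] for r, c in cells) // len(cells)
            for r, c in cells:
                out[r][c] = avg
    return out


def black_bar(image, top, left, height, width):
    """Overwrite a rectangle with zeros, discarding whatever was there."""
    out = [row[:] for row in image]
    for r in range(top, top + height):
        for c in range(left, left + width):
            out[r][c] = 0
    return out


# Three distinct 2x2 grayscale patches with the same average brightness.
original_a = [[10, 30], [50, 70]]
original_b = [[70, 50], [30, 10]]
original_c = [[40, 40], [40, 40]]

censored = [pixelate(img, 2) for img in (original_a, original_b, original_c)]
print(censored[0] == censored[1] == censored[2])  # True

barred_a = black_bar(original_a, 0, 0, 2, 2)
barred_b = black_bar(original_b, 0, 0, 2, 2)
print(barred_a == barred_b)  # True
```

Because the mapping is many-to-one, any “uncensored” output is one arbitrary pick among many possible originals, not a recovery.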

What these tools do is fabricate new content based on:

- Patterns from similar images

- Pre-trained datasets (usually questionable adult content)

- Generic human anatomy approximations

What You See Isn’t What Was There

Even if the AI produces a “realistic-looking” result, it’s a complete fake — an AI hallucination, not the truth.

Case Study: The DeepNude Controversy

Back in 2019, a program called DeepNude went viral for claiming to undress photos of women using AI. The app was crude, disrespectful, and widely criticized — but it worked just enough to scare people.

DeepNude didn’t uncover real nudity — it overlaid GAN-generated images trained on adult content onto the original photo, producing a semi-realistic but entirely fabricated nude version.

The backlash was fierce. The developers pulled it offline within days. Still, the damage was done, and countless clones popped up across shady corners of the internet.

“These AI models don’t reveal the truth. They hallucinate it. And that makes them dangerous,”

— Rachel Coldicutt, Tech Ethics Researcher

Legal and Ethical Consequences

Using these tools isn’t just sketchy — it could be illegal in many jurisdictions.

Privacy Violation

Creating fake nude content without someone’s consent is a serious violation of their privacy, even if it’s AI-generated. Jurisdictions including the UK, the US, and the EU have introduced or proposed laws targeting non-consensual intimate images, AI-generated ones included.

Deepfake Offenses

Many of these tools fall into the deepfake category. Distributing AI-generated fake images can lead to:

- Jail time

- Civil lawsuits

- Social media bans

Cyber Harassment

Even attempting to “uncensor” a public figure or acquaintance’s image may count as digital harassment under state and national laws on image-based abuse, the kind of legislation that U.S. advocacy groups such as the Cyber Civil Rights Initiative campaign for.

“Consent doesn’t end when AI enters the picture. Creating fake nudes without consent is still exploitation.”

— Danielle Citron, Legal Scholar on Deepfake Law

Cybersecurity Dangers: Why Most Are Malware Traps

Here’s something most people don’t know: the majority of these tools are fake or dangerous. I tested several of them (on an isolated test system), and here’s what I found:

Common Red Flags

- APK downloads from sketchy websites

- Fake “Free AI Photo Uncensor” tools that request excessive permissions at install

- Apps that ask for your gallery, contacts, camera, and location

- Payment walls that never deliver any output

- Hidden ransomware in cracked tools
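The permissions red flag is easy to check for yourself. Below is a rough sketch, assuming you already have the list of permissions an app requests (from its store listing or manifest; obtaining it is outside this example). The permission strings are standard Android identifiers, but the `SENSITIVE` mapping, the function name `unexplained_access`, and the idea that only gallery access is justified for a photo tool are my own illustrative assumptions.

```python
# Standard Android permission identifiers mapped to the data they expose.
# Which ones count as "sensitive" here is an illustrative choice.
SENSITIVE = {
    "android.permission.READ_CONTACTS": "contacts",
    "android.permission.CAMERA": "camera",
    "android.permission.ACCESS_FINE_LOCATION": "location",
    "android.permission.READ_MEDIA_IMAGES": "gallery",
    "android.permission.READ_EXTERNAL_STORAGE": "gallery",
}


def unexplained_access(requested, justified=("gallery",)):
    """Return sensitive data types an app asks for beyond what it plausibly needs."""
    asked = {SENSITIVE[p] for p in requested if p in SENSITIVE}
    return sorted(asked - set(justified))


# Hypothetical permission list for a so-called photo tool.
requested = [
    "android.permission.INTERNET",
    "android.permission.READ_CONTACTS",
    "android.permission.CAMERA",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.READ_MEDIA_IMAGES",
]

flags = unexplained_access(requested)
print(flags)  # ['camera', 'contacts', 'location']
```

A non-empty result is not proof of malware, but a photo editor that wants your contacts and location deserves a hard second look.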

Real Reviews from Forums:

“I installed a tool from a Telegram group. It bricked my phone. Cost me 5k to get my data back.”

– Anonymous user on r/PrivacyTools

“All it did was paste generic boobs on the censored part. I feel stupid for even trying it.”

– Reddit user on r/deepfakes

Legitimate Alternatives for Image Restoration

If your goal is ethical, such as restoring damaged images, enhancing resolution, or repairing blurred text, here are real, safe tools you can use:

| Tool | Purpose | Trust Level |
|---|---|---|
| Remini | AI photo sharpening and enhancement | ✅ Safe |
| Photoshop AI | Fill, restoration, object removal | ✅ Trusted |
| Topaz Labs | Upscaling and noise reduction | ✅ Professional |
| Runway ML | Video + image editing with AI | ✅ Creative Use |

None of these tools pretend to “uncensor” — and that’s exactly why they’re safe, respected, and legal.

FAQs: What People Are Asking

Are uncensored photo remover tools real?

No. They generate fake visuals using AI predictions. They cannot recover the real hidden content.

Can AI remove censorship from blurred photos?

No. Blurring or blacking out an image destroys data. AI can only guess, not recover.

Are photo censorship removal apps illegal?

Using them on private or non-consensual images can be illegal, and it could lead to legal trouble in many jurisdictions.

Are these tools malware?

Many of them are. Be cautious with any tool that requires unknown APKs or permissions.

Final Verdict: Don’t Fall for the Illusion

Uncensored photo remover tools are often scams, hoaxes, or ethical disasters. They prey on curiosity, exploit misunderstood AI tech, and offer nothing real in return — except potential malware, lawsuits, or reputational damage.

If you’re serious about digital media or AI editing, stick with tools that respect creativity, privacy, and legal boundaries.

Final Scorecard

| Category | Score | Notes |
|---|---|---|
| Tech Accuracy | ⭐☆☆☆☆ (1/5) | AI just guesses; no actual uncensoring |
| Ethical Soundness | ☆☆☆☆☆ (0/5) | Serious consent & privacy issues |
| Cybersecurity | ☆☆☆☆☆ (0/5) | High risk of malware & phishing |
| Practical Use | ⭐⭐☆☆☆ (2/5) | Only for fictional image generation |
| Overall Safety | ⭐☆☆☆☆ (1/5) | Best avoided altogether |

Closing Thoughts

Curiosity is natural, especially with the rise of powerful AI. But tech should be used to empower, not exploit. Always question tools that sound too good to be true. If it claims to “reveal what’s hidden,” it’s probably not just fake — it might also be harmful.
